G'day readers. Tonight's challenge was upgrading our MS 2FA servers to the latest version so we can add another two on-prem 2FA servers to the cluster, eradicate our Freja appliance and replace it with the equivalent Microsoft 2FA service. This will save our organisation a considerable sum of money, and in the current climate of fiscal restraint, that is a good thing!

We had already done the groundwork by installing the two new 2FA servers on fresh Windows OS builds. However, before new hosts can be added to the 2FA cluster, the existing hosts need to be at the same version level.

The upgrades were done one host at a time. After making sure that the 2FA instance staying in service held the master designation, we removed the other host's endpoint from the load-balanced set:

Get-AzureVM -ServiceName <service_name> -Name <vm_name> | Remove-AzureEndpoint -Name <endpoint_name> | Update-AzureVM
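Before removing the endpoint it is worth recording its settings, because the same protocol, ports, internal load balancer name and LB set name are needed to add it back later. This is a minimal sketch, assuming the classic (Service Management) Azure PowerShell module is loaded and you are signed in; the function name `Save-MfaEndpointSettings` and the CSV path are hypothetical, and the endpoint property names should be checked against your module version.

```powershell
# Hypothetical helper: snapshot a VM's endpoint settings before removing it
# from the load-balanced set. Needs the classic Azure (Service Management)
# module and an authenticated session when it is called.
function Save-MfaEndpointSettings {
    param(
        [Parameter(Mandatory)] [string] $ServiceName,
        [Parameter(Mandatory)] [string] $VmName,
        [Parameter(Mandatory)] [string] $Path
    )

    # Get-AzureEndpoint lists the input endpoints defined on the VM.
    $endpoints = Get-AzureVM -ServiceName $ServiceName -Name $VmName | Get-AzureEndpoint

    # Keep the values needed later to recreate the endpoint with Add-AzureEndpoint.
    $endpoints |
        Select-Object Name, Protocol, LocalPort, Port, LBSetName, InternalLoadBalancerName |
        Export-Csv -Path $Path -NoTypeInformation

    # Also return the endpoint objects for a quick look in the console.
    $endpoints
}
```

For example, running `Save-MfaEndpointSettings -ServiceName <service_name> -VmName <vm_name> -Path .\mfa-endpoint.csv` before the removal leaves you a record of the values to plug back into `Add-AzureEndpoint`.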

This leaves the quiesced 2FA host free to be upgraded. The upgrade should be possible via the GUI, but 'Check for Updates' did nothing, so we were forced to download the latest 2FA Server version as described here:

https://docs.microsoft.com/en-us/azure/multi-factor-authentication/multi-factor-authentication-get-started-server

and run the MSI installer ourselves, which is exactly what the 'Check for Updates' GUI action is supposed to do.

Running this MSI did indeed update the 2FA instance to the latest version, at which point restarting the application insisted that we also update the AD FS adapter. This all appeared to complete without any issues, without even a prompt to restart the OS. Time to test that ADFS login still works: we were still receiving 2FA SMS messages, so the service was still authenticating users.

The next step is to add the upgraded 2FA host back into the load-balanced set. PowerShell ISE does the heavy lifting of the endpoint creation:

Get-AzureVM -ServiceName <service_name> -Name <vm_name> | Add-AzureEndpoint -Name <endpoint_name> -Protocol <protocol> -LocalPort <local_port> -PublicPort <public_port> -DefaultProbe -InternalLoadBalancerName <name> -LBSetName <name> | Update-AzureVM

Test that ADFS 2FA messages are still being sent, and we can now turn our upgrade work to the second 2FA server. Make the upgraded server the master 2FA host, remove the second server's endpoint from the load balancer and run its 2FA MSI upgrade. Then update its AD FS adapter and add its endpoint back to the load balancer.
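The per-host sequence can be sketched as a loop over both servers. This is a minimal sketch, assuming the classic Azure module and the same endpoint values used in the `Add-AzureEndpoint` command above; `Invoke-MfaRollingUpgrade` is a hypothetical name, and the master promotion, MSI upgrade, adapter update and testing stay manual, marked by `Read-Host` pauses.

```powershell
# Hypothetical sketch of the rolling upgrade: take each 2FA host out of the
# internal load-balanced set, pause for the manual work, then add it back.
# Needs the classic Azure (Service Management) module when it is called.
function Invoke-MfaRollingUpgrade {
    param(
        [Parameter(Mandatory)] [string]   $ServiceName,
        [Parameter(Mandatory)] [string[]] $VmNames,
        [Parameter(Mandatory)] [string]   $EndpointName,
        [Parameter(Mandatory)] [string]   $Protocol,
        [Parameter(Mandatory)] [int]      $LocalPort,
        [Parameter(Mandatory)] [int]      $PublicPort,
        [Parameter(Mandatory)] [string]   $InternalLoadBalancerName,
        [Parameter(Mandatory)] [string]   $LBSetName
    )

    # Same settings as the Add-AzureEndpoint command, passed by splatting.
    $addParams = @{
        Name                     = $EndpointName
        Protocol                 = $Protocol
        LocalPort                = $LocalPort
        PublicPort               = $PublicPort
        DefaultProbe             = $true
        InternalLoadBalancerName = $InternalLoadBalancerName
        LBSetName                = $LBSetName
    }

    foreach ($vmName in $VmNames) {
        # Manual prerequisite: the host staying in service must hold the master role.
        $null = Read-Host "Confirm a host other than $vmName is the 2FA master, then press Enter"

        # Quiesce this host by removing its endpoint from the load-balanced set.
        Get-AzureVM -ServiceName $ServiceName -Name $vmName |
            Remove-AzureEndpoint -Name $EndpointName |
            Update-AzureVM

        # Manual step: run the 2FA Server installer, update the AD FS adapter, test logon.
        $null = Read-Host "Upgrade 2FA Server and the AD FS adapter on $vmName, test ADFS logon, then press Enter"

        # Put the upgraded host back behind the internal load balancer.
        Get-AzureVM -ServiceName $ServiceName -Name $vmName |
            Add-AzureEndpoint @addParams |
            Update-AzureVM
    }
}
```

Keeping the human checkpoints in the loop is deliberate: the master designation and the installer were GUI-driven in our case, so the script only automates the load balancer changes around them.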

Finally, test that AD FS authentication is still up and sending 2FA SMS messages, and we can consider the process complete.

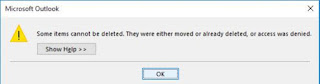

Here is where the upgrade process fell over in a big pile of steaming dung.

We shut down one of the 2FA servers to simulate endpoint failover and tried an ADFS logon, only to get a rather helpful error back from the user portal stating that "An error has occurred".

Checking the logs on the AD FS server, we can see a repeating entry:

Event 364:

Exception details: Microsoft.IdentityServer.RequestFailedException: No strong authentication method found for the request from https://XXXXXX. [redacted] at Microsoft.IdentityServer.Web.PassiveProtocolListener
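Rather than scrolling through Event Viewer, the repeating entries can be pulled out with `Get-WinEvent`. This is a minimal sketch, assuming it runs on the AD FS server, where the admin channel is named `AD FS/Admin`; the function name `Get-AdfsStrongAuthFailure` is hypothetical.

```powershell
# Hypothetical helper: from the most recent Event 364 entries in the AD FS
# admin log, keep those carrying the "No strong authentication method found"
# exception. Run it on the AD FS server.
function Get-AdfsStrongAuthFailure {
    param(
        [int] $MaxEvents = 50
    )

    # SilentlyContinue suppresses the error Get-WinEvent raises when no events match.
    Get-WinEvent -FilterHashtable @{ LogName = 'AD FS/Admin'; Id = 364 } -MaxEvents $MaxEvents -ErrorAction SilentlyContinue |
        Where-Object { $_.Message -like '*No strong authentication method found*' } |
        Select-Object TimeCreated, Id, Message
}
```

Running it before and after each change gives a quick way to see whether the error has stopped recurring.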

So we have an obscure error that Google is yet to become acquainted with, and an in-progress upgrade that cannot easily be backed out of. Squeaky seat time.

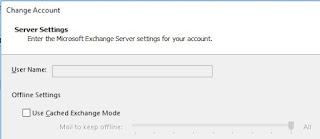

As we had a custom claims rule in place, the AD FS MMC GUI was unable to display it. The existing rule was inspected with the following PowerShell command and then removed:

Get-ADFSRelyingPartyTrust

Removing it effectively reset the claims rules to a factory-default state, so we could confirm in the GUI that everything else was configured correctly.
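Note that `Get-AdfsRelyingPartyTrust` only reads the trust; changing or clearing a rule is done with `Set-AdfsRelyingPartyTrust`. This is a minimal sketch of backing up and clearing the rule, assuming the custom rule lived in the trust's additional authentication rules (it could equally have been an issuance rule) and that passing `$null` clears it on your AD FS version; the function name and backup path are hypothetical.

```powershell
# Hypothetical helper: save a relying party trust's additional authentication
# rules to a file, then clear them. Needs the ADFS module on an AD FS server.
function Clear-AdfsAdditionalAuthRules {
    param(
        [Parameter(Mandatory)] [string] $RelyingPartyName,
        [Parameter(Mandatory)] [string] $BackupPath
    )

    $trust = Get-AdfsRelyingPartyTrust -Name $RelyingPartyName
    if (-not $trust) { throw "Relying party trust '$RelyingPartyName' not found." }

    # Keep a copy of the custom rule text so it can be restored later.
    Set-Content -Path $BackupPath -Value ([string]$trust.AdditionalAuthenticationRules)

    # Assumption: $null clears the rules; verify this on your AD FS version.
    Set-AdfsRelyingPartyTrust -TargetName $RelyingPartyName -AdditionalAuthenticationRules $null
}
```

If the rule turns out to be needed after all, the saved text can be put back with `Set-AdfsRelyingPartyTrust -TargetName <rp_name> -AdditionalAuthenticationRules (Get-Content <backup_path> -Raw)`.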

A review of the log files on the 2FA server suggested that the AD FS adapter being requested was the one from the previous 2FA server version.

In the Program Files folder of the 2FA server there are two PowerShell scripts that unregister and re-register the AD FS adapter with AD FS.

After running the unregister and register scripts, the AD FS adapter was recognised by the 2FA server and we no longer saw the "An error has occurred" message on the user portal when logging on via ADFS.
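The unregister/register step can be scripted as follows. This is a minimal sketch, assuming the default install folder `C:\Program Files\Multi-Factor Authentication Server` and an elevated session on the server where AD FS runs; the function name `Reset-MfaAdfsAdapter` is hypothetical. AD FS only picks up newly registered authentication providers after its service (`adfssrv`) restarts, so the sketch restarts it. Depending on your configuration, the adapter may also need to be deselected in the global multi-factor authentication policy before it can be unregistered.

```powershell
# Hypothetical helper: re-register the 2FA Server AD FS adapter using the
# scripts shipped in the 2FA Server install folder, then restart AD FS.
function Reset-MfaAdfsAdapter {
    param(
        # Assumed default install folder; change it if installed elsewhere.
        [string] $InstallPath = 'C:\Program Files\Multi-Factor Authentication Server'
    )

    $unregister = Join-Path $InstallPath 'Unregister-MultiFactorAuthenticationAdfsAdapter.ps1'
    $register   = Join-Path $InstallPath 'Register-MultiFactorAuthenticationAdfsAdapter.ps1'

    # Fail early if either script is missing rather than half-completing the change.
    foreach ($path in @($unregister, $register)) {
        if (-not (Test-Path -Path $path)) { throw "Script not found: $path" }
    }

    # Remove the stale registration from the previous version, then register again.
    & $unregister
    & $register

    # Restart AD FS so it loads the newly registered adapter.
    Restart-Service -Name adfssrv

    # List the authentication providers AD FS now has registered.
    Get-AdfsAuthenticationProvider
}
```

Microsoft's documentation refers to the registered adapter as `WindowsAzureMultiFactorAuthentication`, so that is the name to look for in the provider list afterwards.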